How Can a Web Developer Help the SEO Expert?

A successful website is a fast website that offers a superior user experience and performs well online. To get there, the website must be built properly, adhering to the official and unofficial rules of the search engines. A web development expert or a team of developers is involved in the build from the start. Once the website is launched, however, it must be thoroughly analyzed to check whether it is optimized for the search engines. A team of SEO experts performs such analyses.

It is safe to say that close cooperation between web developers and SEO experts is vital for the website’s successful launch and for its fight against online competitors. Being a web developer myself, who also happens to be the CEO of a Web and SEO agency, I’ll try to explain how web developers can assist the SEO team in analyzing and optimizing the website.

What Is Website Analysis?

Website analysis is the evaluation of the website’s online performance in terms of speed, functionality, and quality of content. It is a process that reveals the issues that prevent the website from ranking high in SERPs (Search Engine Results Pages). These issues can be of a technical or content-related nature. The web developers can help with the technical parts. Let’s explain it further.

Technical Website Analysis

The technical website analysis is performed by SEO experts with advanced tech knowledge of how a website functions. It covers a long list of technicalities that must be optimized:

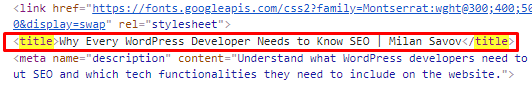

Knowledge of Important HTML Elements

The HTML elements affect how you show up in search results. These are the Title Tag, the Meta Description Tag, and the Images.

The SEO experts know the best practices when it comes to optimizing these elements, and they are:

The Title Tag should be between 50 and 60 characters long and contain the relevant keywords. It should also be well-written, as it directly influences the click-through rate (CTR).

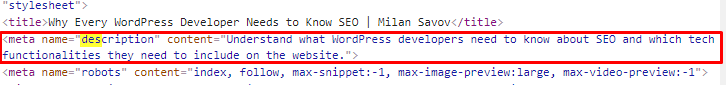

The Meta Description Tag should be around 155 characters long, unique, and contain main or secondary keywords relevant to the business. It should also be intriguing to read so that the searcher ends up clicking on the result.

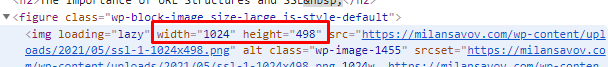

The Images should be optimized and compressed so that the site can load faster.

The tips from web developers that SEO experts should find helpful are:

- Properly set up the code of the Title Tag

- Properly set up the code of the Meta Description

- Explain that providing the image height and width in the code lets the browser reserve space for the image before it loads, which avoids layout shifts and improves the perceived page speed.
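To make the length guidelines above easier to check, here is a minimal sketch in Python that reads the Title Tag and the Meta Description from a page's HTML and reports anything outside the recommended ranges. The names `HeadTagParser` and `check_head` are made up for this illustration, and the limits are simply the 50-60 and 155 character guidelines mentioned above, not hard rules enforced by any search engine.

```python
from html.parser import HTMLParser


class HeadTagParser(HTMLParser):
    """Collects the <title> text and the meta description of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None  # stays None if the tag is missing
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_head(html, title_range=(50, 60), description_max=155):
    """Return a list of warnings; an empty list means both tags look fine."""
    parser = HeadTagParser()
    parser.feed(html)
    parser.close()
    warnings = []
    title = parser.title.strip()
    if not title_range[0] <= len(title) <= title_range[1]:
        warnings.append(f"title is {len(title)} characters")
    if parser.description is None:
        warnings.append("meta description is missing")
    elif len(parser.description) > description_max:
        warnings.append(f"meta description is {len(parser.description)} characters")
    return warnings
```

Calling `check_head(page_html)` on the source of any page returns the list of warnings, which the SEO team can review together with the developer.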

Knowledge of Hyperlinks

Building links is the basis of every off-page SEO strategy. Backlinks can be acquired through guest posts, comments in forums and communities, blog post comments, etc.

From a web-dev point of view, there are three types of links:

Text link

<a href="https://www.example.com/webpage.html">Anchor Text</a>

NoFollowed Link

<a href="https://www.example.com/webpage.html" rel="nofollow">Anchor Text</a>

Image Link

<a href="https://www.example.com/webpage.html"><img src="/img/keyword.jpg" alt="description of image" height="50" width="100"></a>

The best practices for the hyperlinks would be:

- Nofollow links should be used for paid links and distrusted content

- Image links should have an optimised alt attribute that serves as the anchor text.
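As a rough illustration of how these three link types can be told apart in code, the sketch below walks through a piece of HTML and records, for every link, whether it is nofollowed and what serves as its anchor text (the visible text, or the alt attribute for image links). `LinkAuditor` and `audit_links` are hypothetical names chosen for this example.

```python
from html.parser import HTMLParser


class LinkAuditor(HTMLParser):
    """Records href, nofollow flag, link type and anchor text of each <a>."""

    def __init__(self):
        super().__init__()
        self.links = []        # one dict per link found
        self._current = None   # the link we are currently inside, if any

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and "href" in attrs:
            rel_values = (attrs.get("rel") or "").lower().split()
            self._current = {
                "href": attrs["href"],
                "nofollow": "nofollow" in rel_values,
                "type": "text",
                "anchor": "",
            }
            self.links.append(self._current)
        elif tag == "img" and self._current is not None:
            # For image links the alt attribute plays the role of anchor text.
            self._current["type"] = "image"
            self._current["anchor"] = (attrs.get("alt") or "").strip()

    def handle_data(self, data):
        if self._current is not None and self._current["type"] == "text":
            self._current["anchor"] += data

    def handle_endtag(self, tag):
        if tag == "a" and self._current is not None:
            self._current["anchor"] = self._current["anchor"].strip()
            self._current = None


def audit_links(html):
    """Return the list of link records found in the given HTML."""
    auditor = LinkAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.links
```

An image link that comes back with an empty `anchor` is one that is missing its alt attribute, which is exactly the case the best practice above warns about.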

Knowledge of HTTP Status Codes

The status codes of all pages on a website show how many broken links and unnecessary redirects there are. The info about these pages can be collected from webmaster tools such as Google Search Console (formerly Google Webmaster Tools). There are seven status codes that are important for SEO:

- 200 – OK/Success

- 301 – Permanent redirect

- 302 – Temporary redirect

- 404 – Not found

- 410 – Gone (permanently removed)

- 500 – Server error

- 503 – Service unavailable (retry later)
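To show how such a list can be put to work, here is a small Python sketch that groups the seven codes above and summarizes the result of a crawl. The crawl data is assumed to be a plain dictionary of URL to status code collected by some other tool; the group names and the functions `classify`, `describe`, and `summarise` are invented for this example.

```python
from collections import Counter
from http import HTTPStatus


def classify(code):
    """Map a status code to a coarse SEO-relevant group."""
    if code == 200:
        return "ok"
    if code in (301, 302):
        return "redirect"
    if code in (404, 410):
        return "missing"
    if code in (500, 503):
        return "server error"
    return "other"


def describe(code):
    """Combine the standard reason phrase with the group from classify()."""
    return f"{code} {HTTPStatus(code).phrase} ({classify(code)})"


def summarise(crawl):
    """Count how many crawled URLs fall into each group."""
    return Counter(classify(code) for code in crawl.values())
```

For instance, `summarise({"/": 200, "/old": 301, "/gone": 404})` counts one page in each of the "ok", "redirect", and "missing" groups, which gives a quick overview of broken links and redirects.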

The web developer can point out the process of how an HTTP status code is changed. For example:

To return a 301 status code (a permanent redirect), open the .htaccess file in a text editor and add the following line of code:

Redirect 301 / http://www.example.com/

… and replace the given URL with the URL you wish to forward your domain name to. Finally, save the file as .htaccess.

Another example: to correctly return the HTTP status code 404 for an error page, open the .htaccess file again and add an ErrorDocument directive followed by the path to the error page. Note that the error page should be on the first level of the website (the root directory). The directive looks like this:

ErrorDocument 404 /404.html

Here, /404.html is the path to the error page, relative to the root of the site. Then, save the file and request a page that doesn’t exist to check whether the correct HTTP status code 404 is returned.

Knowledge of Canonicalization

The canonical tag (“rel canonical”) tells the search engines that a specific URL represents the master copy of a page. Using it prevents the problems caused by duplicate content appearing on multiple URLs. The SEO experts use it to tell search engines which version of a URL they want to appear in the SERPs.

Web developers can execute the process in several ways:

1. rel=canonical <link> tag

Add a <link> tag in the code of all duplicate pages, pointing to the canonical page. This method is excellent for mapping an unlimited number of duplicate pages. However, it might add to the size of the page, and it works only for HTML pages.

2. rel=canonical HTTP header

Send a rel=canonical header in the page response. It won’t increase the page size, and it can map an unlimited number of duplicate pages. On the other hand, maintaining the mapping can be complex on larger sites or on sites whose URLs change often.

3. Sitemap

In a sitemap, you can specify the canonical pages. This is very easy to do and maintain, especially for large sites. However, it is a less powerful signal than the rel=canonical technique.

4. 301 redirect

This will tell Googlebot that the redirected URL is a better version than the given URL. It should be used only when deprecating a duplicate page.
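The first two options differ only in where the same piece of information is placed. A short Python sketch, with the hypothetical helper names `canonical_link_tag` and `canonical_http_header`, shows both forms side by side:

```python
from html import escape


def canonical_link_tag(url):
    """Build the <link> element that goes into the <head> of a duplicate page."""
    # escape() keeps characters such as & and " from breaking the attribute.
    return f'<link rel="canonical" href="{escape(url, quote=True)}">'


def canonical_http_header(url):
    """Build the (name, value) pair of the equivalent HTTP response header."""
    return ("Link", f'<{url}>; rel="canonical"')
```

The header variant is the one to reach for when the duplicate is not an HTML page (a PDF, for example), since there is no <head> to put a tag into.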

Knowledge of URLs

SEO experts know that the best practices for URLs are:

- Shorter, readable, human-friendly URLs with descriptive keywords

- Content kept in a subfolder of the main domain rather than on a separate subdomain, to preserve the authority

Ex.

https://example.com/blog – recommended

https://blog.example.com – not recommended

- Excluding dynamic parameters

Web developers can explain the common URL elements and point out the good and the bad practices. First, here are all the elements of a URL:

https://blog.example.com/category/keyword?id=123#top

- https:// – protocol

- blog – subdomain

- example – root domain

- com – top-level domain

- category – subfolder/path

- keyword – page

- id=123 – parameter

- top – named anchor
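The same breakdown can be reproduced with Python's standard `urllib.parse` module. The sketch below is only an illustration: `describe_url` is a made-up name, and the split of the host into subdomain, root domain, and top-level domain is deliberately naive (it assumes a host with at least two labels and a single-label TLD such as "com", so it would mislabel hosts like example.co.uk).

```python
from urllib.parse import parse_qs, urlsplit


def describe_url(url):
    """Split a URL into the elements listed above."""
    parts = urlsplit(url)
    labels = parts.hostname.split(".")  # e.g. ["blog", "example", "com"]
    return {
        "protocol": parts.scheme,
        "subdomain": ".".join(labels[:-2]),  # naive: everything before the last two labels
        "root_domain": labels[-2],
        "top_level_domain": labels[-1],
        "path": parts.path,
        "parameters": parse_qs(parts.query),
        "named_anchor": parts.fragment,
    }


elements = describe_url("https://blog.example.com/category/keyword?id=123#top")
```

Note that the parser treats the "?" and "#" characters as separators, so they belong to neither the parameter nor the named anchor.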

Bad practices:

- Page identification hashes (when unique pages are identified with a long string of random characters, something like this: 5GOC569C-5DDG-4A9D-9515-532VODA039F.html)

- Session hashes (hashes that identify a visitor’s session should be kept out of URLs, as they create many URLs for the same page and hurt the SEO)

- File extensions (the URLs should be free of .php, .aspx, etc.)

- Non-ASCII characters (accented Latin and non-basic punctuation should be avoided)

- Underscores (they offer no tangible benefits over hyphens and have poorer usability and SEO value)
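Some of these bad practices are easy to detect automatically. The following Python sketch flags file extensions, underscores, non-ASCII characters, and session parameters in a URL. It does not try to detect random page identification hashes. The list of extensions and the session parameter names are assumptions picked for the example, and `lint_url` is a hypothetical helper, not part of any SEO tool.

```python
import re
from urllib.parse import parse_qs, urlsplit

# Assumed examples; extend both to match the platform the site runs on.
EXTENSION_PATTERN = re.compile(r"\.(php|aspx?|jsp)$", re.IGNORECASE)
SESSION_PARAMS = {"sid", "sessionid", "phpsessid", "jsessionid"}


def lint_url(url):
    """Return the list of bad practices found in the URL (empty if none)."""
    parts = urlsplit(url)
    problems = []
    if EXTENSION_PATTERN.search(parts.path):
        problems.append("file extension")
    if "_" in parts.path:
        problems.append("underscore")
    if not parts.path.isascii():
        problems.append("non-ASCII character")
    query_keys = {key.lower() for key in parse_qs(parts.query)}
    if query_keys & SESSION_PARAMS:
        problems.append("session parameter")
    return problems
```

Running `lint_url` over the URLs in a sitemap is a quick way to produce a to-do list for the next URL cleanup.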

Good practices:

- Robust URL mapping (will solve the problem of having a very long URL, including the full date and a long title, wrapping onto two lines). It works by embedding a unique ID early in the path, after which you can have a long, descriptive URL. The CMS will use only the ID value to do a successful lookup, so it won’t matter if the URL wraps onto two lines. There is a disadvantage, though: the IDs are not human-friendly.

- Hackable URLs, where part of the path can be adjusted or removed, making it easier for visitors to navigate around the pages of the site.

Example

example.com/blog/2021/05/20/title

Each forward slash should produce predictable results:

example.com/blog/2021/05/ – overview of all May 2021’s posts

example.com/blog/2021/ – overview of all 2021’s posts

example.com/blog/ – show the latest updates in the blog section, regardless of their publication date
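A hackable URL implies that every shortened version of the path is a real page. The sketch below, in which `parent_urls` is a hypothetical helper, lists the shortened versions of a URL so that each one can be checked for a meaningful response:

```python
from urllib.parse import urlsplit, urlunsplit


def parent_urls(url):
    """List every ancestor path of the URL, from the closest to the top section."""
    parts = urlsplit(url)
    segments = [segment for segment in parts.path.split("/") if segment]
    parents = []
    # Drop one trailing segment at a time, stopping at the first-level folder.
    for depth in range(len(segments) - 1, 0, -1):
        path = "/" + "/".join(segments[:depth]) + "/"
        parents.append(urlunsplit((parts.scheme, parts.netloc, path, "", "")))
    return parents
```

For `https://example.com/blog/2021/05/20/title`, the function returns the day, month, year, and blog overview URLs, in that order.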

Knowledge of Robots Exclusion

According to SEO best practices, only Meta Robots and X-Robots-Tag directives can remove URLs from search results; robots.txt only controls crawling. SEO experts should also know that they shouldn’t block the CSS or JavaScript files in robots.txt.

Here is basic info about each of them:

Robots.txt

Location: https://example.com/robots.txt

User-agent: googlebot

Disallow: /example.html

Sitemap: https://example.com/sitemap.xml

X-Robots-Tag

Location: Sent in the HTTP headers

X-Robots-Tag: noindex

Meta Robots

Location: In the HTML <head>

<meta name="robots" content="[PARAMETER]">

The important parameters are:

- Noindex (do not index)

- Nofollow (do not follow links)

- Noarchive (do not show cache)

When the robots <META> tag is not defined, the default is “INDEX, FOLLOW”

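URLs that carry a noindex directive shouldn’t be blocked in robots.txt; if the crawler cannot fetch the page, it never sees the noindex instruction.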
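Python ships with a robots.txt parser in `urllib.robotparser`, which makes it easy to test the rules before they go live. The sketch below feeds it the sample robots.txt from above and adds a small, hypothetical helper, `blocked_noindex_urls`, that reports noindexed pages which the crawler would not be allowed to fetch. The list of noindexed URLs is assumed to come from elsewhere, for example from a site crawl.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: googlebot
Disallow: /example.html

Sitemap: https://example.com/sitemap.xml
"""


def build_parser(robots_txt):
    """Parse robots.txt content that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def blocked_noindex_urls(parser, user_agent, noindex_urls):
    """Return the noindexed URLs the crawler is not allowed to fetch."""
    return [url for url in noindex_urls if not parser.can_fetch(user_agent, url)]


parser = build_parser(ROBOTS_TXT)
```

Any URL returned by `blocked_noindex_urls` is a conflict: the page asks not to be indexed, but the crawler is never allowed to read that request.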

Knowledge of Sitemap Syntax

The default location of an XML Sitemap is https://example.com/sitemap.xml

SEO experts should know that a sitemap cannot contain over 50,000 URLs. If they need to optimize larger websites, they should create multiple sitemaps listed under a single sitemap index file.

Here is how a sitemap index file looks:

<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>https://example.com/sitemap1.xml.gz</loc>
    <lastmod>2019-01-01T18:23:17+00:00</lastmod>
  </sitemap>
  <sitemap>
    <loc>https://example.com/sitemap2.xml.gz</loc>
    <lastmod>2019-01-01</lastmod>
  </sitemap>
</sitemapindex>
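On a large site, the splitting is usually automated. Here is a minimal Python sketch of the idea: `chunk_urls` cuts a list of URLs into groups of at most 50,000, and `build_sitemap_index` produces an index that lists one sitemap file per group. Both function names are assumptions made for this example, the optional <lastmod> element is left out for brevity, and the XML declaration would still need to be added when writing the file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split the URL list into groups that each fit into one sitemap."""
    return [urls[start:start + size] for start in range(0, len(urls), size)]


def build_sitemap_index(sitemap_urls):
    """Return the <sitemapindex> document as a string."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        sitemap = ET.SubElement(root, "sitemap")
        ET.SubElement(sitemap, "loc").text = url
    return ET.tostring(root, encoding="unicode")
```

With 120,000 URLs, `chunk_urls` yields three groups, so the index would list three sitemap files.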

Knowledge of Rich Snippets and Structured Data

Schemas help the search engines better understand the content and thus enhance the search results. The common Structured Data Types are:

- Local business

- FAQ page

- Person

- How to

- Product

- Article

- Recipes

- QA page

Here is an example of how a rich snippet for a review is coded:

<script type="application/ld+json">

{

"@context": "http://schema.org/",

"@type": "Review",

"reviewBody": "The restaurant has excellent ambiance.",

"itemReviewed": {

"@type": "Restaurant",

"name": "Fine Dining Establishment"

},

"reviewRating": {

"@type": "Rating",

"ratingValue": 5,

"worstRating": 1,

"bestRating": 5,

"reviewAspect": "Ambiance"

}

}

</script>
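In practice, such snippets are rarely typed by hand; the template or CMS generates them from the review data. A possible Python sketch of that step follows. The function `review_json_ld` and its parameters are hypothetical, and the structure simply mirrors the example above.

```python
import json


def review_json_ld(body, item_type, item_name, rating, aspect=None, worst=1, best=5):
    """Render a Review as a JSON-LD <script> element."""
    data = {
        "@context": "http://schema.org/",
        "@type": "Review",
        "reviewBody": body,
        "itemReviewed": {"@type": item_type, "name": item_name},
        "reviewRating": {
            "@type": "Rating",
            "ratingValue": rating,
            "worstRating": worst,
            "bestRating": best,
        },
    }
    if aspect is not None:
        data["reviewRating"]["reviewAspect"] = aspect
    payload = json.dumps(data, indent=2)
    # Escape "</" so that user-supplied text cannot close the script element early.
    payload = payload.replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"
```

Building the snippet with `json.dumps` instead of string concatenation keeps quotes and special characters in the review text from producing invalid JSON.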

Performance-related Knowledge

Of course, how a website performs is one of the critical factors of its online success. The performance of a site hugely depends on its page speed. Web developers share the following tips for speeding up the website:

- The code should be compressed and minified

- The page redirects should be reduced

- Render-blocking JavaScript should be removed

- Tree shaking should be used

- Browser caching should be leveraged

- CDN should be used
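To give a feeling for what compression alone can save, here is a small Python sketch that compares the size of a text asset before and after gzip compression. `compression_report` is a made-up helper that expects a non-empty string; real savings depend on the file and on how the web server is configured, so treat the numbers as an estimate.

```python
import gzip


def compression_report(text):
    """Compare the raw and the gzip-compressed size of a non-empty text asset."""
    raw = text.encode("utf-8")
    packed = gzip.compress(raw)
    return {
        "raw_bytes": len(raw),
        "gzip_bytes": len(packed),
        "saved": 1 - len(packed) / len(raw),  # share of bytes saved, 0..1
    }
```

Passing the contents of a stylesheet or a script file to `compression_report` shows how many bytes would be saved on the wire if the server compressed that file.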

When it comes to modern JavaScript Sites, here is what one should do:

- JavaScript bundles should be kept small, especially for mobile devices, as small bundles improve speed, lower memory usage, and reduce CPU costs

- Server-side pre-rendering should be used to improve the speed, the UX, and the crawler accessibility

- The runtime performance should be tested, including under network “throttling”

Conclusion

Web developers’ expertise should guide the implementation of the SEO recommendations and suggestions. SEO experts know what will make the site better optimized, and the web developers can execute it using the best web development practices they’ve learned through experience. It is safe to say that the relationship between web developers and SEOs works best when they work hand in hand.